Recently, I have been involved in a number of discussions with people who are setting up clusters of various Fusion Middleware products, often on an Exalogic machine. These discussions have led me to feel that it would be worth sharing some views on the ‘right’ way to design a cluster for different products. These views are by no means meant to be canonical, but I wanted to share them anyway as an example and a conversation starter.

I want to consider three products that are commonly clustered, and which have somewhat different requirements – SOA (or BPM), OSB and Coherence. Let’s take each one in turn.

SOA and/or BPM

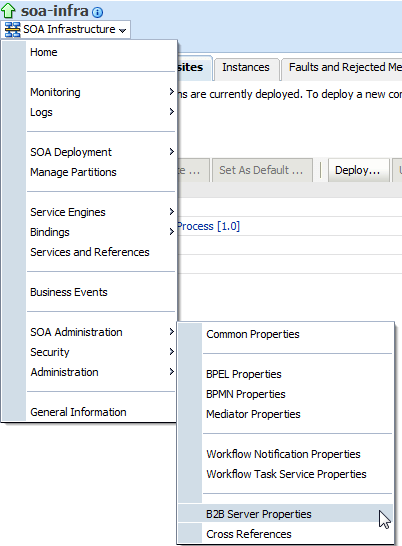

SOA and BPM support a domain with either exactly one (managed) server or exactly one cluster. You cannot have two (or more) SOA/BPM clusters in a single WebLogic domain. The SOA/BPM cluster is largely defined by the database schema, in particular the SOAINFRA schema, that each server is pointing to. All servers/nodes in a cluster must be pointing to the same SOAINFRA schema, and a SOAINFRA schema can only be used by nodes in a single cluster.

As an aside, if you were to point nodes from two SOA ‘clusters’ to the same SOAINFRA schema for some reason, you would basically end up with just one cluster – although it would be an unsupported configuration and a lot of things would break.

SOA/BPM clusters are usually created for one of two reasons – to add extra capacity, or to improve availability. It is important to understand that SOA and BPM do not support dual site active/active deployment, i.e. you cannot have two clusters, each with their own SOAINFRA across two data centres with any kind of database level replication. Within a single site though, all of the nodes are active and all share work between them.

To have a cluster, you need to have a load balancer in front of the SOA servers. This could be a hardware or software load balancer. It needs to be capable of distributing work across all of the nodes in the cluster. Ideally, it should also be able to collect heartbeats or response times and use that information to route new sessions to servers which seem to be least busy.

When a BPEL or BPMN process is executing in a cluster, any time there is a point of asynchronicity, e.g. an invoke, onAlarm, onMessage, wait, catch event, receive task, explicit call to checkpoint(), etc., the process instance will be dehydrated. It is possible, and even likely, that the process will be rehydrated later on a different node in the cluster. The design of SOA/BPM means that any node can pick up any process instance and continue from where it last dehydrated. This makes it easy to dynamically resize the cluster, by adding and removing nodes, which will automatically take their share of the work. It also makes handling the failure of a node in a cluster particularly straightforward, as the load balancer will notice that node has failed and stop routing it work.

You also need to make sure that all of your callbacks are using the (virtual) IP address of the load balancer, not of any particular server in the cluster. This means that all callbacks can be handled by any node in the cluster.

As your SOA/BPM workload grows, you basically want to scale up your cluster by adding more nodes. Any decision to split the cluster would most likely be based on separation of different business workloads, perhaps for reasons of maintainability – different release cycles, timing of patches, etc., rather than any technical reason to split the cluster.

I think the most important factor here is to realize that when you run multiple SOA clusters, they will each be in a different WebLogic domain, they will each have their own SOAINFRA (and other) schemas, they will have different addresses, and they will be running different workloads, i.e. different composites.

It is really important to understand that it is not possible to have a particular process instance run part way on one cluster, and then complete on a different cluster – as the two clusters would have two totally separate SOAINFRA databases, and things like callbacks, process instance IDs, messages, etc., would all be different.

So I think from the point of view of starting a clustered deployment, the simplest approach is to just build a single SOA cluster. This will greatly reduce the administrative overhead of dealing with multiple clusters.

OSB

Now let’s consider OSB. It is a little different to SOA/BPM. It does not use a database to store state, as it is designed to handle stateless, short lived operations, so we don’t need to worry about sharing database schemas.

It turns out what we do need to worry about is how many artifacts we are deploying in the OSB cluster. When you start up OSB, it goes through a process of ‘compiling’ all of the artifacts – that is the WSDLs, XSDs and all that – essentially into objects in memory. If there are a lot of artifacts, or if they are particularly complicated – they have a lot of attributes for example – or if they contain nested references, this ‘compilation’ can start to take a long time. And the resulting data in memory can start to take up a lot of the heap.

A good rule of thumb is to say you probably want no more than 500 or so artifacts in a single OSB cluster. Otherwise, the startup times become so long that outages to restart are prohibitively long. Imagine if you had to wait an hour to start up your OSB cluster for example – would that be too long? The amount of memory in the heap required to store all of these also grows, so you would end up with a whole bunch of memory hungry servers that take forever to start – not ideal at all.

So the best approach, in my opinion, is to split up your OSB workload into several OSB clusters – with each one having a reasonable number of artifacts on it. You can work out what is reasonable for you by looking at the startup time and the memory needs.

Now the next logical question is how do they talk to each other? What if a proxy service on cluster A needs to talk to a business service on cluster B? I have heard various approaches including departmental and enterprise service buses (hierarchical), and deploying all business services on all clusters, but splitting up the proxy services, or vice versa, and so on.

I think the best approach here is to have all requests to OSB route through a load balancer, and use a simple set of rules on the load balancer to route to the correct cluster based on the service URL. If you create small enough groups of services under different URL paths, this also makes it easy for you to relocate services between OSB clusters if necessary for any reason, without any impact to any of your service consumers. This also makes it easy for services to talk to each other regardless of which cluster they are deployed on.
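
To make the routing idea concrete, here is a minimal sketch in Python of the kind of rule table a load balancer might hold. It is only an illustration: the URL prefixes, the cluster names and the `route` and `relocate` helpers are all hypothetical, and a real load balancer would express the same thing in its own configuration language.

```python
# Hypothetical rule table: URL path prefix -> OSB cluster that hosts it.
# The prefixes and cluster names are illustrative assumptions.
ROUTES = {
    "/payments/": "osb-cluster-a",
    "/core/": "osb-cluster-a",
    "/reporting/": "osb-cluster-b",
}


def route(path, routes):
    """Return the cluster for the longest matching prefix, or None."""
    matches = [prefix for prefix in routes if path.startswith(prefix)]
    if not matches:
        return None
    return routes[max(matches, key=len)]


def relocate(prefix, new_cluster, routes):
    """Move a group of services by changing a single routing rule."""
    routes[prefix] = new_cluster
```

Consumers keep calling the same URL on the load balancer; moving the payments services to another cluster is a call like `relocate("/payments/", "osb-cluster-c", ROUTES)` and nothing changes on the consumer side.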

A good way to split things across the clusters, in my opinion, is to put critical things on their own clusters first, then basically divide up everything else across other clusters. Having critical things on their own clusters helps you to manage their availability, patching, performance, etc., individually and prevents the situation of being unable to update something because it is in an environment shared with a critical component that cannot tolerate the update.
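
As a rough sketch of that split, assuming the 500-artifact rule of thumb from earlier, the following Python gives each critical service its own cluster and then packs the rest into shared clusters using a simple first-fit approach. The `plan_clusters` function and the service names and artifact counts in the usage note are hypothetical, and in practice you would tune the cap from your own startup times and heap usage.

```python
MAX_ARTIFACTS = 500  # rule of thumb from this article, not a hard limit


def plan_clusters(services, max_artifacts=MAX_ARTIFACTS):
    """Group services into clusters.

    services: list of (name, artifact_count, is_critical) tuples.
    Returns a list of clusters, each a list of service names.
    """
    clusters = []

    # Critical services go first, each on a dedicated cluster.
    for name, _count, critical in services:
        if critical:
            clusters.append([name])

    # Everything else: largest first, into the first shared cluster with room.
    shared = []  # each entry is [artifact_total, [service names]]
    others = sorted((s for s in services if not s[2]), key=lambda s: -s[1])
    for name, count, _critical in others:
        for bucket in shared:
            if bucket[0] + count <= max_artifacts:
                bucket[0] += count
                bucket[1].append(name)
                break
        else:
            shared.append([count, [name]])

    clusters.extend(names for _total, names in shared)
    return clusters
```

For example, `plan_clusters([("payments", 120, True), ("core", 300, False), ("reporting", 250, False), ("lookup", 150, False)])` puts payments on its own cluster and spreads the other three across two shared clusters. The sketch does not handle a single service that exceeds the cap on its own.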

Coherence

Now we come to Coherence, which is different again. As a general rule of thumb, it is ideal to have Coherence nodes have no more than 4GB of heap. The data in Coherence clusters tends to stay around, so they are tuned differently to SOA/BPM (for example) where most of the data is short lived and rarely tenured.

For Coherence, having more nodes in the cluster is usually a good thing. The other question is whether to split up Coherence clusters. Again, I think the (most) right answer here is to make that decision in terms of separating logical business functionality when it makes sense. Unless of course you get a really big cluster, then you might start to have some technical reasons to look at splitting it up. But I only know of a couple of organizations who have Coherence clusters anywhere near big enough for that to be a concern.

A word about the Exalogic Enterprise Deployment Guide

A lot of Exalogic customers refer to the example topology in the Enterprise Deployment Guide. That example is well suited to a large Java EE application deployed across a cluster of WebLogic Servers and Coherence nodes. I think the EDG makes it pretty clear that this example is not meant to be for all scenarios, and I think when we consider SOA/BPM, OSB and Coherence, there are some compelling reasons why we might choose to go with a slightly different approach.

For example, if we just blindly followed that same approach for SOA and OSB clusters, we would probably end up with resource contention issues – not enough memory available and not enough cores available to run the number of JVMs we might come up with.

Recommended approach

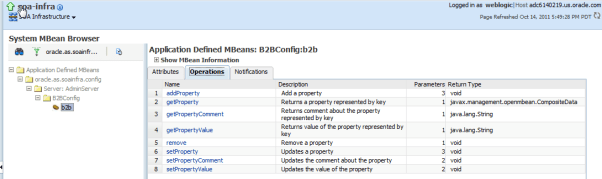

Let’s pretend we have six machines on which to build our environment. This could equally be six compute nodes in an Exalogic, or just six normal machines, it does not really matter for our purposes here. For arguments sake, let’s say each one has 12 cores and 96GB of memory.

I think now is a good time for a picture!

Here are some important things to note about this approach:

- It does not make a lot of sense to have more JVMs than cores because they will just end up competing with each other for system resources. So in the approach above we have no more than 9 JVMs on any compute node: 1 SOA, 1 AdminServer, 2 OSB, 4 Coherence and 1 NodeManager (not shown, but running on every compute node). We could probably fit more, but as we will see later memory is also an important consideration. Also, keep in mind that the operating system is going to use some of those cores as well, so you can’t really afford to allocate them all to JVMs.

- Let’s say we allocate 16GB of heap to each SOA and OSB managed server and 4GB to each Coherence server. That means that with just these JVMs, we are potentially consuming 64GB of memory on each compute node. This is two thirds of the available memory, a good rule of thumb high water mark. Remember that there are also going to be other processes using memory, including the operating system, and of course, unless you are running JRockit, the JVM is going to have a permanent generation too, which will take up more memory. Maybe 16GB is too high – you don’t have to use up all the memory you have of course, but I guess this is really going to depend on the nature of the workload, and as I said at the beginning, I am not trying to make a one size fits all recommendation here.

- The AdminServers for the various clusters are striped across the compute nodes. The cluster can of course survive the loss of the AdminServer and it can be restarted by a NodeManager on another compute node. But it just makes good sense to put them on different machines, so that in the event of the failure of one compute node, you would only lose one or zero of them, not all of them at once.

- All of the URLs that consumers use point to the load balancer – whether those consumers are on these compute nodes or external. The load balancer decides where traffic is routed. If we found that our payments and core services could no longer fit in a single OSB cluster, we could move one to the other cluster, or to a new cluster altogether without any impact on consumers. All we would need to do is update the routing rule in the load balancer.

- All clusters are stretched across all compute nodes. The idea here is to be able to get the best possible use of the available resources. Of course this could be tuned to suit the actual workload and nodes may be added or removed. Some managed servers may not be running, but the point is that each cluster (product) has the ability to run across all nodes. So if any one node were lost, it would not matter which one, because all nodes are essentially equal.
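
The JVM and memory arithmetic in the first two points can be checked with a short Python sketch. The per-node layout, core count and memory size are the ones used in this article. The `Jvm` class and `summarize` function are just illustrative names, and because no heap size is given here for the AdminServer or NodeManager, they are counted as JVMs but contribute no heap in this sketch.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Jvm:
    kind: str
    count: int    # how many of these run on one compute node
    heap_gb: int  # 0 where this article gives no heap figure


# Per-node layout from the example in this article.
NODE_LAYOUT = [
    Jvm("SOA managed server", 1, 16),
    Jvm("OSB managed server", 2, 16),
    Jvm("Coherence server", 4, 4),
    Jvm("AdminServer", 1, 0),   # heap not stated, assumed 0 here
    Jvm("NodeManager", 1, 0),   # heap not stated, assumed 0 here
]

CORES = 12
MEMORY_GB = 96


def summarize(layout, cores, memory_gb):
    """Total the JVMs and heap on one node and compare with the hardware."""
    jvms = sum(j.count for j in layout)
    heap = sum(j.count * j.heap_gb for j in layout)
    return {
        "jvms": jvms,
        "heap_gb": heap,
        "jvms_within_cores": jvms <= cores,
        "heap_fraction": heap / memory_gb,
    }
```

Calling `summarize(NODE_LAYOUT, CORES, MEMORY_GB)` gives 9 JVMs and 64GB of heap, which is two thirds of the 96GB on the node. Changing the heap sizes in `NODE_LAYOUT` is a quick way to see how far a different allocation moves you from that high water mark.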

Let’s consider for a moment an alternative. Suppose we rearranged the OSB clusters so that all of the managed servers in OSB Cluster A are on compute nodes CN01, CN02 and CN03, and all the managed servers in OSB Cluster B are on the other three. What would happen if we needed OSB Cluster A to have more capacity? Or what would happen if we lost CN01, or, god forbid, CN01, CN02 and CN03 all at once? OSB Cluster A would be under-resourced if we needed more capacity or lost a single node, and completely unavailable if we lost all three. We could not easily just start up OSB Cluster A on the remaining nodes, or add another server on one of those nodes. This would require some manual effort – reconfiguration at the least, and possibly redeployment as well.
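
A small Python sketch makes the comparison easy to play with. It assumes one managed server per cluster per compute node, and the node names CN04 to CN06 and the `surviving_servers` helper are hypothetical names I have made up for the illustration.

```python
# Six compute nodes; CN04-CN06 are assumed names for "the other three".
NODES = ["CN01", "CN02", "CN03", "CN04", "CN05", "CN06"]

# Recommended approach: every cluster can run on every node.
STRETCHED = {
    "OSB Cluster A": set(NODES),
    "OSB Cluster B": set(NODES),
}

# Alternative: each cluster is pinned to half of the nodes.
PARTITIONED = {
    "OSB Cluster A": {"CN01", "CN02", "CN03"},
    "OSB Cluster B": {"CN04", "CN05", "CN06"},
}


def surviving_servers(placement, failed_nodes):
    """Count the managed servers each cluster has left after node failures."""
    failed = set(failed_nodes)
    return {cluster: len(nodes - failed) for cluster, nodes in placement.items()}
```

Losing CN01, CN02 and CN03 leaves OSB Cluster A with no servers at all in the partitioned layout, while in the stretched layout both clusters still have three servers each.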

I think a key measure of the quality of an architecture is its simplicity. The simplest architectures are the best. No need to make things any more complicated than they need to be. Complex architectures just introduce more opportunity for error and more management cost and inflexibility.

Another good test is flexibility. This approach does not impose any arbitrary limits on how you could deploy your applications.

Availability is another factor to consider, and I think the approach described provides the best possible availability across the whole system from the available hardware – and the best hardware utilization as well.

What about patching?

Patching is a very important consideration that should not be avoided when designing your architecture. How do you patch a cluster like this, especially if you cannot afford a long outage?

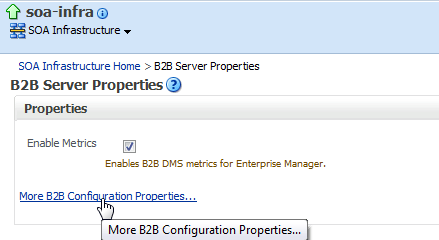

My suggested approach here is to have two sets of binaries, the active and the standby binaries, for each cluster. The clusters would be running on the active binaries. When you need to apply a patch to your production environment, after you have completed testing of the patch in non-production environments, of course, you should apply the patch to the standby binaries, and therefore to those domains created from the standby binaries, the standby domains.

Now, both the active domain and the standby domain (in the case of SOA) should be pointing to the same SOAINFRA database. You would never run them both at the same time of course. When the patching is completed, shut down the active domain and start up the (newly patched) standby domain, which is pointing at the same SOAINFRA and therefore will start up as logically the same cluster. Now it is the active cluster, and the other one is the standby. If it goes bad, just swap back. When you are ready, go patch the standby too, to keep them in sync.

[If there are any other ‘old mainframe guys’ reading this, you might note the similarity to the zones in SMP/E.]

Update: It seems that this approach still has some challenges, when you think about the fact that many patches might require running a database schema update, or a script (like a WLST script for example) which may require you to start up the server(s). So I think for now we really need to take full backups before applying patches so that we can roll back if needed. Although even then we need to make sure that we don’t let any messages come through when we are testing the patched domain, otherwise we might lose work if we need to roll back!

What about patches that change the database schema? In this case you are going to have to schedule an outage to do the patching, so that you have the opportunity to back up the database before applying the patch. Trying to do it by just swapping would deprive you of the ability to roll back the patch by just swapping domains again.

Another important consideration is that there may be some files that need to be shared/moved/copied between the active and standby domains. It would be important to keep a tight grasp on all configuration changes to make sure that any changes made since the last swap are applied to the other domain when a swap occurs. It might be a good idea to swap weekly, just to make sure there is a formalized process around this, and things don’t get lost.

Summary

Well, that’s it. I would like to acknowledge that most of this was built up over the course of many conversations with many people. I certainly do not claim that all of these are my own original ideas, but rather a summary of the position I now hold based on many conversations with a bunch of smart folks. I would especially like to thank Robert Patrick for his ideas and many discussions on this topic. Also a special mention to Jon Purdy for his input on Coherence.

As I said in the beginning, these are just my views and I would certainly be very interested to hear your feedback and to continue the discussion.
